Female politicians disadvantaged by online prejudices and stereotypes

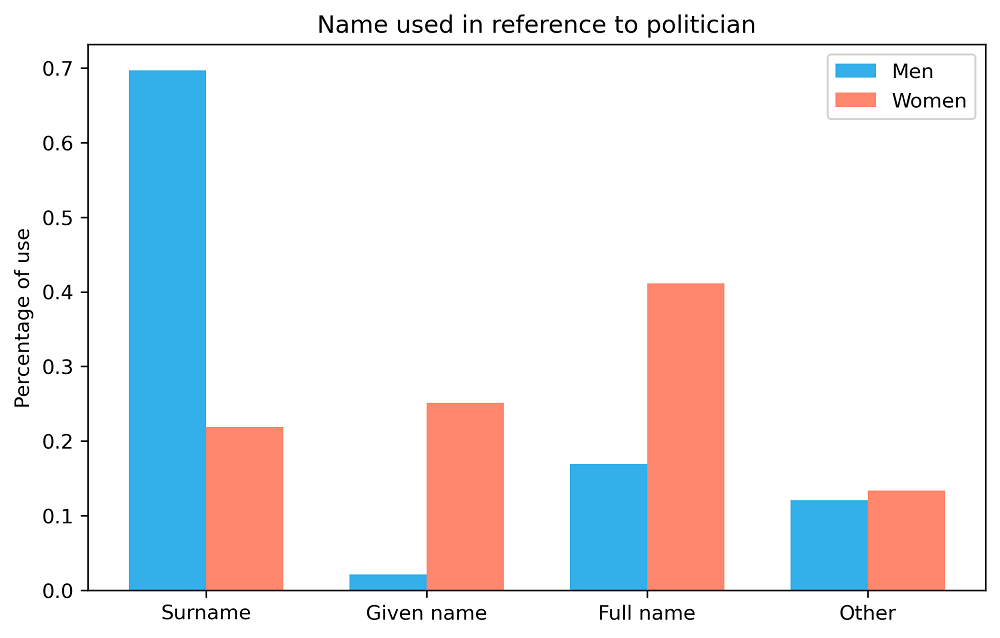

In Reddit comments, female politicians are more likely than their male counterparts to be referred to by their first names and to be discussed with a less professional focus. This is the finding of a new study from the University of Copenhagen. The researchers' previous work investigated gender differences in search engine results and language models, and it reached the same main conclusion: gender bias is widespread online. According to the researchers, this disadvantages female politicians.

In the run-up to both the Danish parliamentary elections and the US midterm elections, many first-time voters are going online to find out more about political candidates. So far, so good.

But gender bias (the prejudices and stereotypes about men and women that shape the way we regard both genders) permeates the internet and readily shapes the information presented to voters.

Previous projects by researchers at the University of Copenhagen’s Department of Computer Science have identified a food chain of gender bias online. Their new study, which investigates content on the social media website Reddit, lends further support to that work.

The study's analyses, now published in PLOS ONE, show significant gender bias in the way politicians are referred to and commented upon in Reddit discussions.

A clear trend

"We see a clear trend of female politicians being more frequently referred to by their given names alone and described with language that relates to their body, clothing or family. Male politicians, by contrast, are more often referred to by their surnames and described in words related to their profession," says Professor Isabelle Augenstein of the Department of Computer Science, one of the researchers behind the study.

The researchers analyzed a total of 10 million English-language comments from conversations about male and female politicians. The comments were analyzed using statistical algorithms across several categories in which gender bias can be expressed.

About half of Reddit users are US-based, by far the largest share. UK and Canadian users follow, each representing 7.5%. While 90% of the politicians mentioned in the study were also from the United States, the comments mentioned politicians from most countries in the world, including Denmark.

Conclusions in line with previous studies

The new study’s main conclusions are consistent with Augenstein's previous research on gender bias online. According to Professor Augenstein, that research identifies a feedback loop of prejudice and gender stereotypes on the internet.

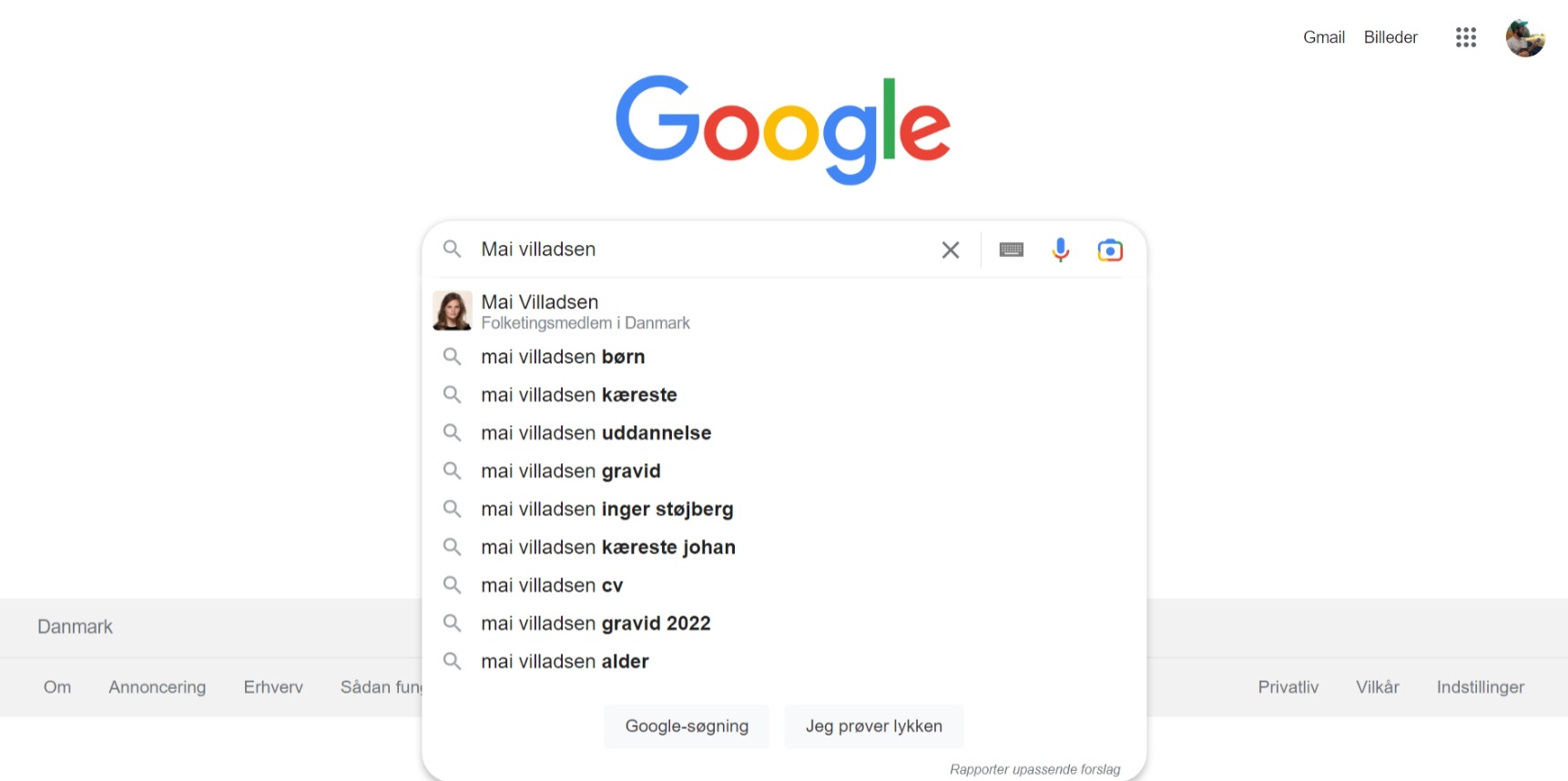

For first-time voters seeking information, the skew begins with names. Names typed into a search engine box send voters down very different paths, depending on whether the given name is, for example, Mette or Lars, or Hillary or Donald.

In fact, a clear disparity appears in the search box as soon as the search engine starts making autocomplete suggestions while a person is typing. The names of female politicians more often prompt suggestions of a less professional nature, for example about family relationships (boyfriend, children, etc.), than the names of male politicians do.

Search results diverge as well. From social media to digitized world literature, the imprints that women and men leave across the internet differ. These differences are reflected in the language models that search engines depend on, and they influence machine learning and AI systems as well.

"There is a feedback loop where the gender stereotypes and prejudices that play out in society are included in digitized literature online, web articles and people's comments on social media, etc. These digital imprints then contribute to shaping the automated models and algorithms that prioritize internet content for us. And in this way, the loop is closed," explains Isabelle Augenstein.

She explains that language models reinforce biases, as algorithms are designed to look for dominant patterns in language and highlight them. This leads to a kind of distillation of bias. Here, users are presented with stereotypes that they are already familiar with, which can subsequently reinforce any prejudices that they might already harbor.

According to Augenstein, this ultimately affects internet users and, as a result, the conditions under which politicians engage with their electorate. A voter seeking information about a female politician will more often encounter less respectful terms, or associations that have nothing to do with the politician's professional role.

"A voter may have never considered the politician's family life or appearance, but when they are presented with that context, they may be tempted to check it out. If they click on the content, or share and comment on it using social media, the stereotypical narrative is reinforced, as the algorithms read this interaction as a success to be repeated," says Augenstein.

These digital footprints help shape the automated models and algorithms that prioritize the internet's content for us, as in this Google search for Mai Villadsen, a Danish politician from Enhedslisten.

An uneven playing field for politicians

According to Professor Augenstein, these differences can have real consequences for female politicians.

"Our data show that the online conditions for politicians are not the same for men and women. Gender bias on the internet can come at a price for female politicians, because their voter base is presented with these prejudices and stereotypes in contexts that are less respectful and less professional than those surrounding male politicians," Augenstein says.

"First and foremost, this matters in the run-up to an election. Here, gender biases can have an impact on what voters focus on with regard to a politician and the policies they are promoting. Ultimately, this can also incentivize female politicians to play along and assume that role if they are using web analytics and registering where the interest lies," she says.

Augenstein sees gender bias online as a clear example of the differences that exist between the sexes, and as a place where these differences can be quantified. However, because content is created by people, she considers it obvious that the problem exists across society in a broader sense, rather than being confined to the internet.

One area not yet investigated in this line of research, and one the research group would like to work on next, is bias affecting other societal groups, for example bias based on social background, ethnicity, or gender identities other than cisgender male or female.

Contact

Professor Isabelle Augenstein

Department of Computer Science

Copenhagen University

augenstein@di.ku.dk

+45 93 56 59 19

PhD Karolina Ewa Stanczak

Department of Computer Science

Copenhagen University

ks@di.ku.dk

+45 23 84 00 97

Journalist and Press Contact

Kristian Bjørn-Hansen

Faculty of SCIENCE

Copenhagen University

kbh@science.ku.dk

+45 93 51 60 02